The Promise and the Peril of the New Digital Revolution

The new global technology race raises an urgent question: are we ready to trust the intelligence we have created?

AI is a subject I have been studying and working with for a long time, since the 1980s. I confess I am an AI enthusiast, and not a recent one. As a teenager I devoured Isaac Asimov's books, such as the famous "Foundation" trilogy and, above all, "I, Robot". "I, Robot" is a collection of short stories that became a landmark in the history of science fiction, both for introducing the celebrated Laws of Robotics and for offering a completely new way of looking at machines. Asimov's robots captured the minds and hearts of generations of writers, filmmakers and scientists, and remain to this day a source of inspiration for everything we read and watch about robots. Then came Stanley Kubrick's unforgettable film "2001: A Space Odyssey" and, with it, HAL 9000 (Heuristically programmed ALgorithmic computer), a computer with advanced artificial intelligence installed aboard the spaceship Discovery and responsible for running the entire ship. Its dialogues with the human characters left me truly impressed by what the future might bring. When I read a paper about ELIZA, software created by researchers at MIT, I saw that AI was indeed possible, since as early as the 1960s a system could interact reasonably well with humans. I started reading every book I could find on the subject and, in the mid-1980s, got approval to run an experiment inside the company where I worked.

At the time the AI landscape was split into two schools of thought: one group adopted the "rule-based" approach, also called "expert systems", and the other followed the idea of neural networks. Neural networks looked very promising, but data was scarce and the available computing power was vastly inferior to what we have today. Pragmatically, I chose expert systems, because the development logic seemed more feasible to me: interview specialists in a given field and encode their decision processes in a decision tree, using IF-THEN-ELSE. An expert system has two basic components: an inference engine and a knowledge base. The knowledge base holds the facts and rules, and the inference engine applies the rules to the known facts and deduces new facts. The first difficulty was learning languages such as Prolog and Lisp. Once that barrier was overcome, actually capturing the specialists' knowledge proved to be the real obstacle: precisely because they were specialists, they were in high demand and had no time to spare, least of all for an experimental project. On top of that, it was very hard to translate their decisions, which were often intuitive, into clear rules that could be placed in the decision tree. And as I accumulated more of the specialist's knowledge, the process became more and more complex. In short, the system never worked properly and was discontinued. But the experience was worth it.
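To make the two components concrete, here is a minimal sketch of a knowledge base and a forward-chaining inference engine in Python (not the Prolog or Lisp of the original project). The rule format, the `forward_chain` function and the credit-analysis facts are all hypothetical, chosen only to illustrate how rules applied to known facts deduce new facts.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule is (premises, conclusion): IF every premise is known THEN add the conclusion.
Rule = Tuple[FrozenSet[str], str]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Inference engine: apply the rules to the known facts until nothing new is deduced."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when all its premises are already known facts.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical knowledge base (illustrative only, not taken from the original system).
RULES: List[Rule] = [
    (frozenset({"stable_income", "no_defaults"}), "low_risk"),
    (frozenset({"low_risk", "small_loan"}), "approve"),
]

if __name__ == "__main__":
    initial_facts = {"stable_income", "no_defaults", "small_loan"}
    print(sorted(forward_chain(initial_facts, RULES)))
```

Even in this toy version the difficulty described above is visible: every piece of a specialist's intuition has to be written down as an explicit rule, and the number of interacting rules grows quickly.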

Over the last decade AI has been reborn, and the emphasis has shifted to neural networks. We now have the two essential ingredients: available computing power and an abundance of data. On the computing side, an ordinary smartphone has more processing power than the entire data center NASA had at its disposal when it sent the first man to the Moon in 1969. And behind that smartphone sit computing clouds with almost unlimited capacity. On the data side, we now generate more than 2.5 quintillion bytes per day, and that number doubles rapidly.

The turning point for neural networks came in the mid-2000s with the research of Geoffrey Hinton, who found efficient ways to train neural networks with many layers. This enabled rapid progress in image and speech recognition algorithms. The term "deep learning" (DL) emerged, and today DL is the basic engine behind the main advances in AI. DL concepts were the foundation of AlphaGo, which beat the world champion at Go, a complex Asian strategy game, and later of AlphaZero, which taught itself to play chess in 4 hours and beat Stockfish, the world champion chess program.

What do we see today? The rapid evolution of AI brings impacts so significant that we have not yet grasped their full extent. We have no idea what our society, our companies and the job market will look like in 2050, but we do know that AI and robotics will change almost every kind of work we do today, transforming careers and professions as we know them.

Yes, AI is already here. Algorithms are already among us and keep spreading, working their way into every aspect of our lives. We must prepare for the changes that a world full of AI algorithms will bring to society. However, AI does not need to be, and should not be, a zero-sum game of humans versus AI; it should be humans plus AI, producing more intelligence. Of course, we have to prepare for that: study, learn and understand what AI is, its potential and its limitations. And there are many limitations! AI is not magic and still has a very long way to go. We are at the beginning of the learning curve.

Here is a list of books on AI that I recommend. I have read all of them and believe they can contribute a great deal to our understanding of what AI is and what it is not.

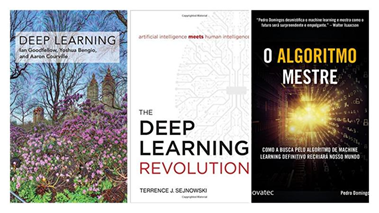

Let us start with "Deep Learning" by Ian Goodfellow et al. It is an excellent book for getting to know and understand deep learning. "The Deep Learning Revolution" by Sejnowski shows us how DL algorithms evolved. Not to be missed. "The Master Algorithm" takes us through the five major schools of ML, showing how they turn ideas from neuroscience, evolution, psychology, physics and statistics into algorithms.

AI is working its way ever deeper into our lives, just as the smartphone did; today it is practically an organ of the human body. Asimov's "I, Robot", from 1950, was a landmark. The plot follows the account of Susan Calvin, a robopsychologist who is being interviewed at the end of her life. She recounts the most important episodes of her career in nine short stories. Starting from individual cases, Asimov sketches a future in which machines make their own decisions and human life is unviable without the help of automatons.

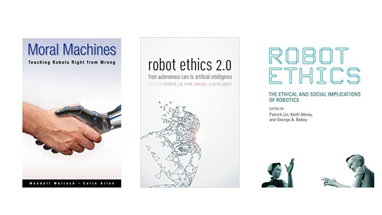

The book also became a classic because it sets out the Three Laws of Robotics: 1) a robot may not injure a human being or allow a human being to come to harm; 2) robots must obey the orders given by human beings, except where such orders conflict with the First Law; and 3) a robot must protect its own existence, as long as this does not conflict with the previous laws. The rules aim at peace between automatons and biological beings, preventing rebellions. AI researchers should respect these guidelines. The question of ethics in AI cannot and should not be underestimated. It must, as a matter of principle, be part of the design of solutions: ethics by design.

The other day I was thinking about my grandchildren and what their future working lives will be like. I know they do not use, and never will use, a keyboard and mouse. And that they will not need to learn to drive. The Internet, apps and wearables are already part of their lives, and they will increasingly live in a digital world, with new social and behavioral habits. They will no longer even use apps, which will be replaced by virtual assistants that communicate through gestures and voice. But from there on everything becomes hazy. How can we prepare ourselves, and our children, for a world of so many transformations and radical uncertainties? A baby born today will be thirty-something in 2050. If all goes well, that baby will still be alive in 2100 and may even be an active citizen of the 22nd century. What should we teach that baby to help him or her survive and prosper in the world of 2050 or of the 22nd century? What kind of skills will he or she need to get a job, understand what is going on around them and navigate the maze of life?

We have no answers to these questions. We have never been able to predict the future accurately, but today it is practically impossible. And AI is already part of our present. Imagine the future! We need to understand AI and make the most of its potential, while recognizing its limitations.

Remember the film "Her", in which the AI system, in Scarlett Johansson's beautiful voice, understood everything Theodore said? Well, we are still a long way from that scenario. There are many quite advanced bots (and others not so much) that give the impression of understanding what we say. But when we look at deep learning techniques more closely, we see that current systems have inherent difficulty understanding how sentences relate to their parts, such as words. This is the principle of compositionality. Compositionality is the property of complex expressions whose meaning is determined by the meanings of their constituents and by the rules used to combine them. All linguistic theories agree that one of the main characteristics of human languages is their capacity to create complex expressions from simple linguistic units. AI has difficulty handling compositionality. It also has difficulty understanding the ambiguity that we humans put into our conversations. Does that mean a machine cannot interact with us? Of course not, but it cannot hold a conversation at the level that we humans do. What is missing? Common sense. There are some good books on NLP techniques.
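The principle of compositionality can be illustrated with a toy example. The sketch below is an assumption-laden simplification in Python: the "meaning" of each word is just a number, and the `LEXICON`, `COMBINE` table and `meaning` function are hypothetical names invented for this illustration. The meaning of the whole expression is computed only from the meanings of its parts and the rule that combines them.

```python
from typing import Callable, Dict, Tuple, Union

# An expression is either a single word or (rule, left_part, right_part).
Expr = Union[str, Tuple[str, "Expr", "Expr"]]

# Hypothetical lexicon: the meaning of each simple unit.
LEXICON: Dict[str, int] = {"two": 2, "three": 3, "four": 4}

# Hypothetical combination rules.
COMBINE: Dict[str, Callable[[int, int], int]] = {
    "plus": lambda a, b: a + b,
    "times": lambda a, b: a * b,
}


def meaning(expr: Expr) -> int:
    """Meaning of the whole = combination rule applied to the meanings of the parts."""
    if isinstance(expr, str):
        return LEXICON[expr]
    rule, left, right = expr
    return COMBINE[rule](meaning(left), meaning(right))


# The same words, "two plus three times four", grouped in two different ways.
reading_a: Expr = ("plus", "two", ("times", "three", "four"))
reading_b: Expr = ("times", ("plus", "two", "three"), "four")

if __name__ == "__main__":
    print(meaning(reading_a), meaning(reading_b))
```

The two readings use exactly the same words but yield different meanings, which is also a small picture of ambiguity: the word sequence alone does not say which structure the speaker intended.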

Browsing my Kindle library, which now holds 404 books, I rediscovered one I read in mid-2011, "Final Jeopardy: Man vs. Machine and the Quest to Know Everything", which tells the story of how Watson was developed. I started rereading it because now, almost ten years later, with so much progress happening so quickly in AI, it is good to revisit the pioneering cases. The moment Watson won the TV quiz show "Jeopardy!" is a milestone in the history of AI. Anyone interested in the evolution of AI and how it got here should read this book.

I liked three books that look at AI from a business perspective. The first, "How AI is Transforming Organizations", is a collection of articles published by MIT Sloan. I also recommend "Competing in the Age of AI", from Harvard Business Review. "Rule of the Robots" argues that AI is an exceptionally powerful technology, a kind of "electricity of intelligence" that is altering every dimension of human life. AI has the potential to help us fight climate change or the next pandemic, but it also has the capacity to cause profound harm. Deep fakes (AI-generated audio or video of events that never happened) can wreak havoc across society. AI enables authoritarian regimes such as China to implement unprecedented mechanisms of social control. And AI can be deeply biased, learning prejudiced attitudes from the data used to train algorithms and perpetuating them. These are issues we need to understand so that we can use AI in the way that is most appropriate and useful for everyone.

A slightly more technical one is "Data Science for Business", a good book for business managers who lead or work with data scientists and ML engineers and want a better understanding of the principles and algorithms available, without going deep into technical detail.

I read and reread Kai-Fu Lee's excellent book "AI Superpowers: China, Silicon Valley, and the New World Order". The book puts forward an interesting thesis, analyzing the evolution of AI as four waves: "Internet AI", "Business AI", "Perception AI" and "Autonomous AI". Each wave drives the evolution of AI forward and disrupts business sectors. The AI researcher Andrew Ng has said that "AI is the new electricity". About a century ago we began electrifying the world. By replacing steam-powered machines with electric ones, we transformed transportation, manufacturing, agriculture, healthcare and practically all of society. Now AI is at its own turning point, starting an equally dramatic transformation of society. In 2021 Kai-Fu Lee published a follow-up book, "AI 2041", in which he imagines the world 20 years from now, with AI operating pervasively, and how that would affect society.

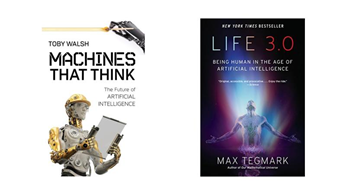

I also recommend three excellent books that debate the relationship between humans and machines. They are well worth reading!

They help us form an opinion on questions such as: how will AI affect crime, war, justice, work, society and our very sense of being human? How can we increase our prosperity through automation without leaving people deprived of income or purpose? What career advice should we give children today so that they avoid jobs that will soon be automated? How do we build more robust AI systems, so that they do what we want without the risk of malfunctioning or being hacked? Should we fear wars fought with lethal autonomous weapons? Will machines become smarter than us at every task, replacing humans in the job market and perhaps in everything else? Will AI help life flourish as never before, or will it give us more power than we can handle? What kind of future do you want?

A thought-provoking discussion is how far AI will be able to go. Does AI understand what it is doing or not? AI is not new. The term was coined in the 1950s, went through ups and downs and now, thanks to the available computing power and a flood of data in digital form, is starting to take shape. We are now able to develop quite sophisticated algorithms, and one specific form of AI, DL, has been the big bet for its evolution. For many, DL is the current state of the art in AI. It is true that sophisticated DL algorithms, outperforming humans at very specific tasks, lead us to regard AI as superhuman in every respect. That is not the case. What we have so far is "narrow AI", which can do one specific thing very well but has not the slightest idea of what it is doing. It has no consciousness and therefore, in the light of what we consider human intelligence, it is still very, very far from being intelligent. When Watson won "Jeopardy!", it did not go out to celebrate with friends. When AlphaGo beat Lee Sedol at Go, it had not the slightest understanding of what it had done. It did what its algorithms were supposed to do, and that was that. AlphaGo cannot do anything except play Go. It cannot play chess. This keeps us from using AI for activities that demand common sense, empathy and creativity. In healthcare, for example, machines can analyze images well, but since they do not really see and merely register pixels, they cannot replace the physician in interactions where medical care requires personalization and humanity. There is a quote attributed to Einstein that is worth citing here: "Any fool can know. The point is to understand". Several books discuss this subject and deserve to be read!

One question deserves closer examination: the future of work in the age of AI. The questions I raised above about my grandchildren, about what to teach a baby born today so that he or she can survive and prosper in 2050 or in the 22nd century, matter most here.

They are hard to answer because, once technology allows us to design bodies, brains and minds, we can no longer be certain of anything, including things that once seemed stable and eternal. We have already left the term science fiction behind. It is more fitting to speak of scientific anticipation, because it is no longer a question of "if" something will be invented, but "when".

For example, the accelerating advance of automation and AI will greatly change today's professions. The arrival of automation in knowledge work radically changes our perception of it. The consensus used to be that automation would affect only operational activities, such as production lines. But now we realize that we may see it at work in activities that are more mental than manual and involve decision-making, activities traditionally carried out by people with university degrees who make up what is considered the upper professional stratum.

Does it sound impossible? Every day there is more evidence that this change is much closer than we think. The day will soon come when automation can replace people in business decision-making. Machines may replace managers who today rely on instinct, experience, relationships and performance-based financial incentives to make decisions that sometimes lead to very bad results. This scenario will force us to change many professions and, obviously, to redesign academic education to meet the challenge. We are not really educating people for the professions of the future. But what should we do? How about starting to examine the topic in more depth? Several books debate the subject with authority.

AI has very great disruptive potential, so having a strategy is essential to face the challenges that are already at the door. But how do you design an AI strategy? AI may replace or modify professions that exist today, as well as create new ones. Broadly speaking, AI uses capabilities such as knowledge, perception, judgment and the means to carry out specific tasks, which used to be the exclusive domain of human beings. The question we ask ourselves is where and how to apply them. Should we use them to create new products or offerings? To improve the performance of existing products? To optimize internal business operations? To improve customer processes? To reduce headcount? To free employees to be more creative? The answers will come from our strategy for applying AI. There is no single answer, since each organization has its own strategy and pace of adoption. In any case, three pillars should underpin the design of the strategy: the level of knowledge and understanding of AI's potential; the capacity (talent) available to put the concepts into practice; and the organization's culture and how open it is to innovation and experimentation. A first step is to study companies that can be considered "AI-powered organizations", those in which AI is at the core of the business, such as Amazon and Alibaba.

A thought-provoking and controversial book is "Superintelligence: Paths, Dangers, Strategies" by Nick Bostrom, director of the Future of Humanity Institute at the University of Oxford, in the United Kingdom. Although the subject may seem forbidding, it became a New York Times best seller. Bostrom debates the real possibility of the advent of superintelligent machines and the associated benefits and risks. He notes that scientists believe there have been five mass extinction events in the history of our planet, when large numbers of species disappeared. The end of the dinosaurs, for example, was one of them, and today we may be living through a sixth, this one caused by human activity. He asks: might we ourselves end up on that list? Of course there are exogenous causes, such as a meteor strike, but he concentrates on a possibility that seems to come straight out of a science fiction film such as "The Terminator". The book naturally stirs controversy and seems somewhat alarmist, but its suppositions could become reality. Some scientists have backed this warning, such as Stephen Hawking, who said verbatim: "The development of full artificial intelligence could spell the end of the human race". Elon Musk, CEO of Tesla, also tweeted: "Worth reading Superintelligence by Bostrom. We need to be super careful with AI. Potentially more dangerous than nukes".

On the positive side, Bostrom points out that creating such machines could exponentially accelerate scientific discovery, opening up new possibilities for human life. An open question is whether and when that level of intelligence will be possible. A survey of AI researchers indicates that a machine with human-level intelligence, known as Human Level Machine Intelligence (HLMI), has a 50% chance of appearing around 2050. For 2100, the probability is 90%! For others this is just a myth. A good debate.

So, everyone, I hope you enjoy this reading list as much as I did. These are stimulating books that offer fantastic insights. Happy reading to all!

Head of Redecore, Partner/Head of Digital Transformation at Kick Corporate Ventures. Investor in and mentor to AI startups and member of the innovation boards of several companies. During his career he was Director of New Applied Technologies and Chief Evangelist at IBM Brasil, and managing partner and leader of the IT Strategy practice at PwC.

He has also held technical and executive positions at companies such as Shell and Chase Manhattan Bank. With a diverse formal education, in Economics and a master's degree in Computer Science, he has always sought to understand and assess the impact of technological innovation on organizations and their business processes.

He writes regularly about information technology for specialist websites and publications such as NeoFeed, and speaks at leading events and conferences such as IT Forum, IT Leaders, CIO Global Summit, TEDx, CIAB and FutureCom. He is the author of eleven books on topics such as Artificial Intelligence, Digital Transformation, Innovation, Big Data and Emerging Technologies. Distinguished member of I2AI. Advisor to EBDI and guest professor at Fundação Dom Cabral, PUC-RJ and PUC-RS. Publisher of Intelligent Automation Magazine.
